Google’s Gemini 2.0 Models: A Counterstrike to DeepSeek’s Offensive

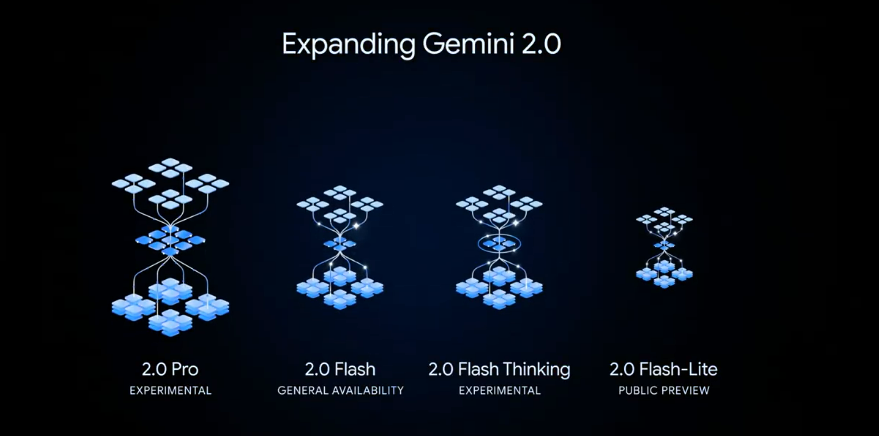

In the ever-intensifying AI arms race, Google has decided it’s time to roll up its sleeves, put on its game face, and unleash its latest arsenal of AI models. Under pressure from the rising star DeepSeek R1, Google has fully released Gemini 2.0 Flash, Gemini 2.0 Flash-Lite, and the experimental version of its flagship large model, Gemini 2.0 Pro. Oh, and let’s not forget the reasoning powerhouse, Gemini 2.0 Flash Thinking, which has also made its debut in the Gemini app. If this were a chess game, Google just moved its queen into play. Let’s dive into the details and see if this gambit is enough to outmaneuver DeepSeek.

The AI Battlefield: DeepSeek vs. Gemini

Before we dive into Google’s shiny new toys, let’s set the stage. DeepSeek R1, a model developed in China, has been making waves with its open-source approach, technical precision, and long-term memory capabilities. It’s like the AI version of a Swiss Army knife—versatile, reliable, and surprisingly affordable. DeepSeek has been stealing the spotlight, leaving Google scrambling to reclaim its throne.

DeepSeek’s strengths lie in its ability to handle sustained dialogue, complex tasks, and large-scale data processing without breaking a sweat. Meanwhile, Google’s Gemini models have historically been more specialized, excelling in specific domains but lacking the versatility of their rival.

Now, with the release of Gemini 2.0 models, Google is aiming to flip the script. Let’s see what these models bring to the table.

Gemini 2.0 Flash: The Workhorse

First up, we have Gemini 2.0 Flash, Google’s “workhorse” model. This isn’t just a fancy nickname—it’s designed for developers who need low latency and high efficiency. Think of it as the AI equivalent of a Toyota Corolla: reliable, efficient, and gets the job done without any unnecessary frills. But don’t let its practicality fool you; this model packs a punch.

Gemini 2.0 Flash is now generally available through the Gemini API in Google AI Studio and Vertex AI. It’s also accessible to all Gemini app users on desktop and mobile. The model has been fine-tuned to deliver improved performance on key benchmarks, and Google is already teasing upcoming features like image generation and text-to-speech capabilities.
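For developers curious what "available through the Gemini API" looks like in practice, here is a minimal sketch using only Python's standard library against the API's REST endpoint. The model ID `gemini-2.0-flash`, the `v1beta` base URL, the `GEMINI_API_KEY` environment variable, and the helper names `build_request` and `generate` are assumptions for illustration, so check the current API reference before relying on any of them.

```python
import json
import os
import urllib.request

# Assumed base URL and model ID; verify both against the current Gemini API docs.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL = "gemini-2.0-flash"


def build_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_BASE}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def generate(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first candidate."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        payload = json.load(resp)
    return payload["candidates"][0]["content"]["parts"][0]["text"]


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical variable name
    if key:
        print(generate("Summarize the Gemini 2.0 lineup in one sentence.", key))
```

Keeping request construction separate from the network call makes the payload easy to inspect or test without spending any quota.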

Gemini 2.0 Flash-Lite: Small but Mighty

For those who want a more cost-effective solution, Google has introduced Gemini 2.0 Flash-Lite. This model is like the younger sibling of Flash—smaller, faster, and cheaper, but still capable of holding its own in a fight. Flash-Lite retains the speed and cost-efficiency of its predecessor, Gemini 1.5 Flash, while delivering better quality and outperforming it on most benchmarks.

Flash-Lite is particularly appealing for developers and businesses looking to cut costs without sacrificing performance. It’s available in public preview in Google AI Studio and Vertex AI, making it a good entry point for those new to Google’s AI ecosystem.

Gemini 2.0 Pro: The Flagship

Now, let’s talk about the big guns: Gemini 2.0 Pro. This experimental model is Google’s most advanced yet, built for strong coding performance and complex prompts. It has a 2-million-token context window, which works out to roughly 1.5 million words in a single prompt. To put that into perspective, it could analyze the entire Harry Potter series in one prompt and still have room left over for a fanfiction sequel.
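The token-to-word conversion behind that claim can be checked with a quick back-of-the-envelope sketch. Both constants are assumptions rather than figures from Google: about 0.75 English words per token is a common rule of thumb, and about 1.08 million words is a commonly cited length for the seven Harry Potter novels. Actual token counts depend on the tokenizer and the text.

```python
# Rough rule of thumb (an assumption, not a Gemini-specific figure):
# one token is about 0.75 English words.
WORDS_PER_TOKEN = 0.75

# Commonly cited approximate length of the seven Harry Potter novels (assumption).
HARRY_POTTER_WORDS = 1_084_000


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a given number of tokens."""
    return round(tokens * words_per_token)


context_words = tokens_to_words(2_000_000)
remaining = context_words - HARRY_POTTER_WORDS

print(f"Approximate capacity: {context_words:,} words")
print(f"Left over after the series: {remaining:,} words")
```

Under these assumptions the window holds about 1,500,000 words, leaving roughly 416,000 words of headroom after the full series.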

Gemini 2.0 Pro also integrates external tools like Google Search and code execution, making it a versatile tool for developers and researchers. It’s currently available in experimental mode to Gemini Advanced subscribers and through Google AI Studio and Vertex AI.

Gemini 2.0 Flash Thinking: The Reasoning Model

Finally, we have Gemini 2.0 Flash Thinking, a model that’s as close as it gets to an AI that “thinks like a human.” This reasoning model is trained to break down prompts into a series of steps, showing its thought process and assumptions. It’s like having a math teacher who explains every step of a problem, but without the chalk dust and coffee breath.

Flash Thinking excels on science and math benchmarks, scoring 73.3% on AIME2024 (Math) and 74.2% on GPQA Diamond (Science). It also features a 1-million-token context window, enabling it to analyze long-form text with ease.

The model is available in the Gemini app, where it can interact with apps like YouTube, Google Maps, and Search. This integration makes it a powerful assistant for both personal and professional use.

Performance Benchmarks: How Do They Stack Up?

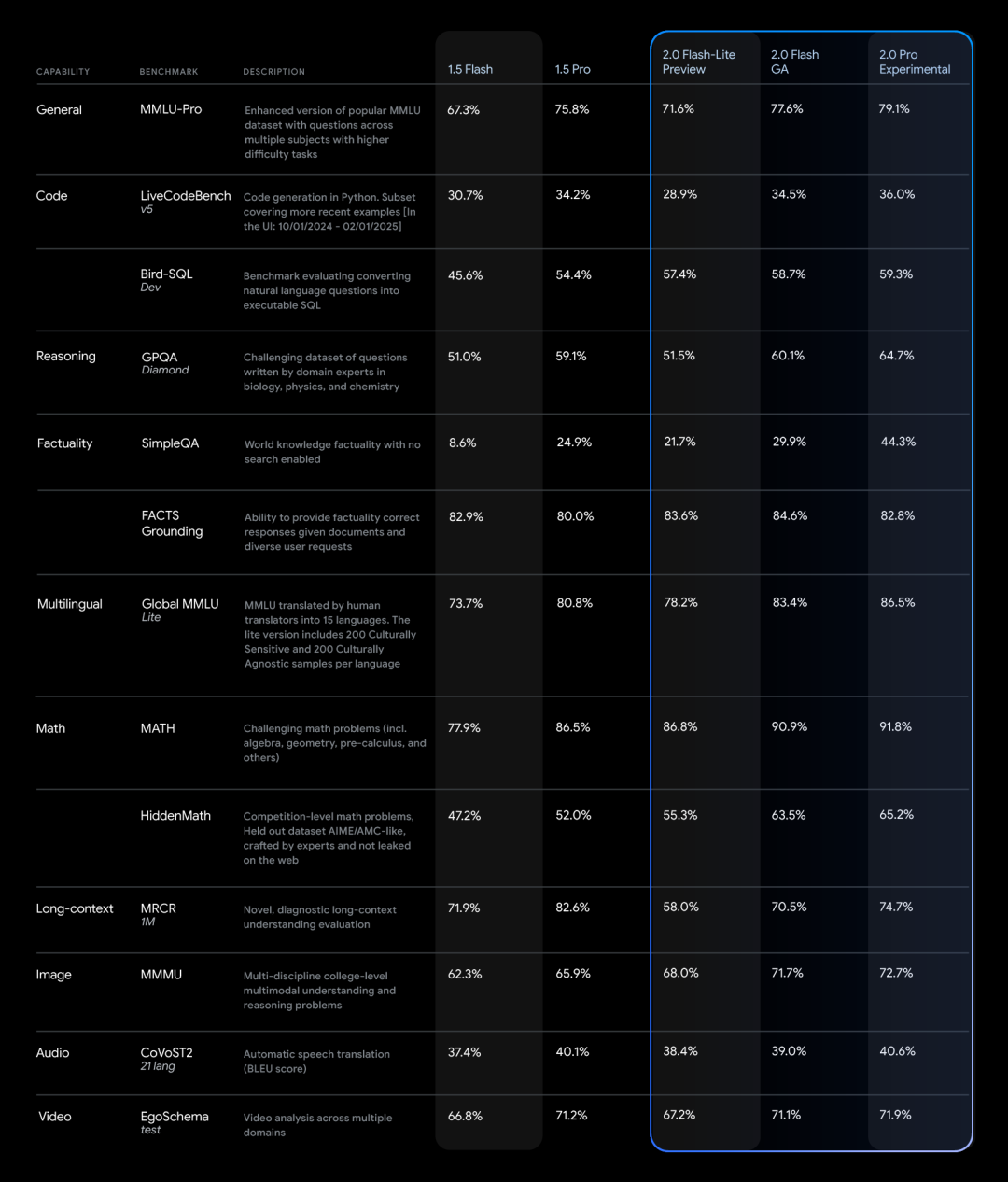

Google’s Gemini 2.0 models have shown impressive performance across various benchmarks:

- Gemini 2.0 Flash Thinking: 73.3% on AIME2024 (Math), 74.2% on GPQA Diamond (Science), and 75.4% on MMMU (Multimodal reasoning).

- Gemini 2.0 Pro: Outperforms Flash and Flash-Lite in reasoning, multilingual understanding, and long-context processing.

These results indicate that Google is not just playing catch-up but is actively pushing the boundaries of what AI can achieve.

Is Google Back on Top?

Google is also taking steps to ensure the safety and reliability of its models. The company uses reinforcement learning techniques to improve response accuracy and runs automated security testing to find vulnerabilities. That testing covers indirect prompt injection, a growing concern in the AI community.

Looking ahead, Google plans to expand the Gemini 2.0 family with additional modalities beyond text, further solidifying its position in the AI landscape.

So, has Google done enough to reclaim its throne from DeepSeek? The answer is… maybe. While Gemini 2.0 models are undeniably impressive, DeepSeek’s versatility and affordability still make it a formidable competitor. However, Google’s focus on specialization, efficiency, and integration with its ecosystem gives it a unique edge.

In the end, the real winners are the developers and businesses who now have access to a wider array of powerful AI tools. Whether you’re team Gemini or team DeepSeek, one thing is clear: the AI race is far from over, and the next move could come from either side. Stay tuned, folks—it’s going to be a wild ride.