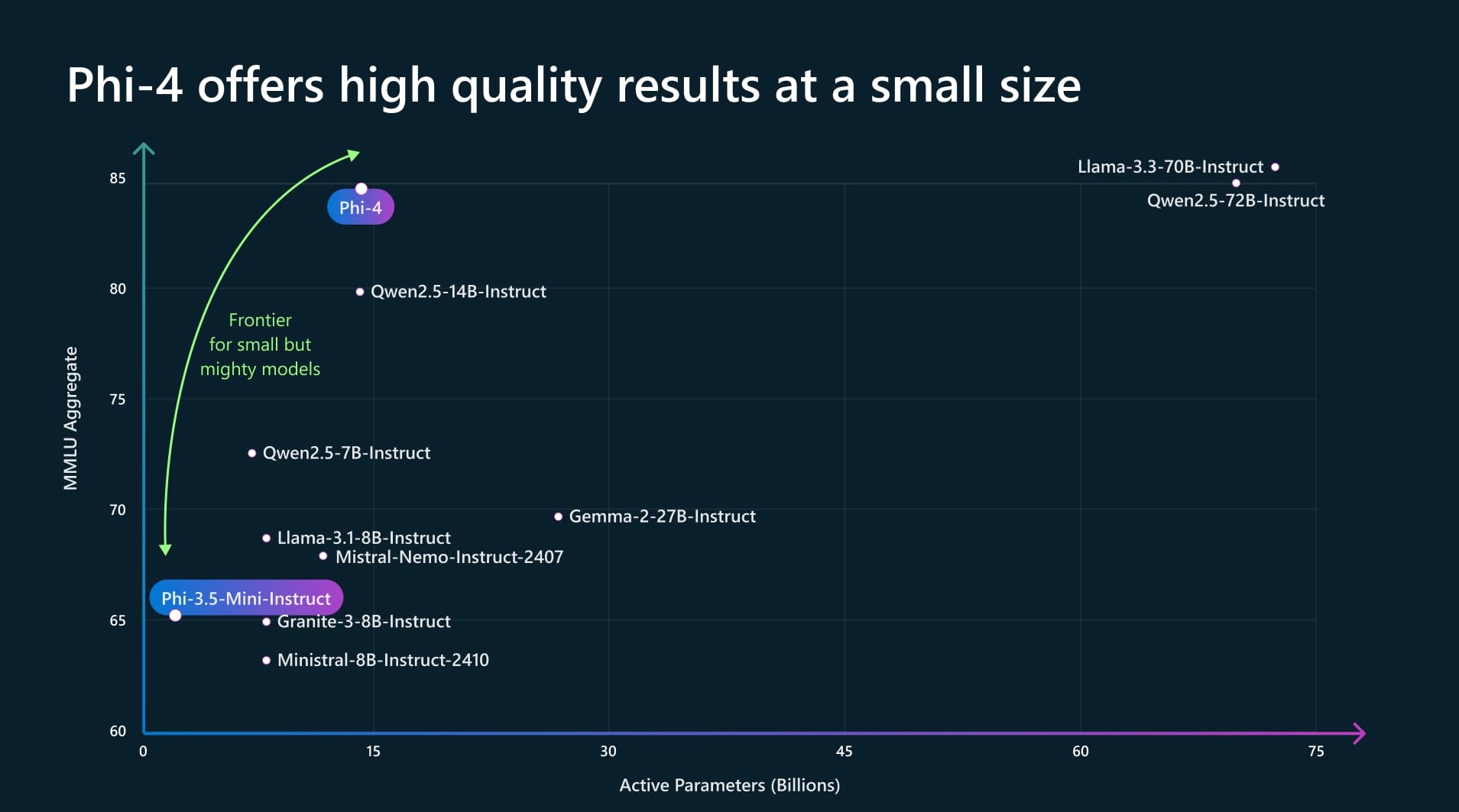

Phi-4: Microsoft's New Small Language Model for Complex Reasoning

Microsoft has recently unveiled Phi-4, the latest addition to its Phi family of small language models (SLMs). The new model focuses on complex reasoning and mathematical problem-solving. With 14 billion parameters, Phi-4 is designed to outperform both comparable and larger models on tasks that require multi-step reasoning.

Model Architecture and Design

Phi-4 is the fourth iteration in Microsoft's open-source language model series. Its architecture closely follows that of its predecessor, Phi-3-medium, with several enhancements that contribute to its stronger performance. Both models have 14 billion parameters and can process prompts of up to 4,000 tokens. A notable improvement in Phi-4 is its upgraded tokenizer, which splits user prompts into tokens more efficiently, so the same text consumes less of the available context.
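To make the tokenizer point concrete, here is a minimal sketch of how a richer vocabulary can represent the same prompt with fewer tokens. It assumes a toy greedy longest-match tokenizer and two hypothetical vocabularies (`SMALL_VOCAB`, `LARGER_VOCAB`); none of this is Phi-4's actual tokenizer or vocabulary, and only the 4,000-token limit comes from the article.

```python
def greedy_tokenize(text, vocab):
    """Split text into the longest matching vocabulary pieces, left to right.

    Falls back to a single character when no vocabulary entry matches.
    """
    max_len = max(len(piece) for piece in vocab)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until one matches.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabularies: the second adds a few longer pieces.
SMALL_VOCAB = {"th", "in", "re", "on"}
LARGER_VOCAB = SMALL_VOCAB | {"reason", "ing", "the", " model"}

CONTEXT_LIMIT = 4000  # prompt limit quoted in the article

prompt = "the model is reasoning"
small_tokens = greedy_tokenize(prompt, SMALL_VOCAB)
larger_tokens = greedy_tokenize(prompt, LARGER_VOCAB)

print(len(small_tokens), "tokens with the small vocabulary")
print(len(larger_tokens), "tokens with the larger vocabulary")
print("fits in context:", len(larger_tokens) <= CONTEXT_LIMIT)
```

Fewer tokens per prompt means more text fits under a fixed token limit, which is one reason tokenizer changes matter even when the limit itself stays the same.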

The model also benefits from an enhanced attention mechanism that can attend across all 4,000 tokens of user input at once, double the attention span of the previous generation. This improvement is crucial for complex reasoning tasks, which require the model to focus on the most relevant details within a long prompt.
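One common way for attention span to differ between model generations is full causal attention versus a sliding window that only sees the most recent tokens. The sketch below builds both kinds of mask for a toy sequence. The sizes (`SEQ_LEN`, `WINDOW`) and function names are hypothetical stand-ins chosen for readability, and this is an illustration of the general idea rather than Phi-4's implementation.

```python
def causal_mask(seq_len, window=None):
    """Return a seq_len x seq_len boolean mask.

    mask[q][k] is True when query position q may attend to key position k.
    With window=None every earlier position is visible (full causal attention);
    otherwise only the most recent `window` positions are visible.
    """
    mask = []
    for q in range(seq_len):
        row = []
        for k in range(seq_len):
            visible = k <= q  # never look at future tokens
            if window is not None:
                visible = visible and (q - k) < window
            row.append(visible)
        mask.append(row)
    return mask


def visible_counts(mask):
    """Number of positions each query position can attend to."""
    return [sum(row) for row in mask]


SEQ_LEN = 8  # toy stand-in for a 4,000-token prompt
WINDOW = 4   # toy stand-in for a window half that size

full = causal_mask(SEQ_LEN)
windowed = causal_mask(SEQ_LEN, window=WINDOW)

print("full:    ", visible_counts(full))
print("windowed:", visible_counts(windowed))
```

With the windowed mask, the last token can no longer see the first half of the sequence directly, while with the full mask it can. That difference is what matters when a relevant detail sits far back in a long prompt.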