The Fitting Room Goes Online: How Google’s AI Is Rebuilding the Interface Between Desire and Data

For a century, the fitting room was retail’s most private ritual, a small mirror between a person and their possible self. You stepped in, closed the curtain, and waited for reflection to decide who you might become.

Now, Google has quietly folded that mirror into the cloud.

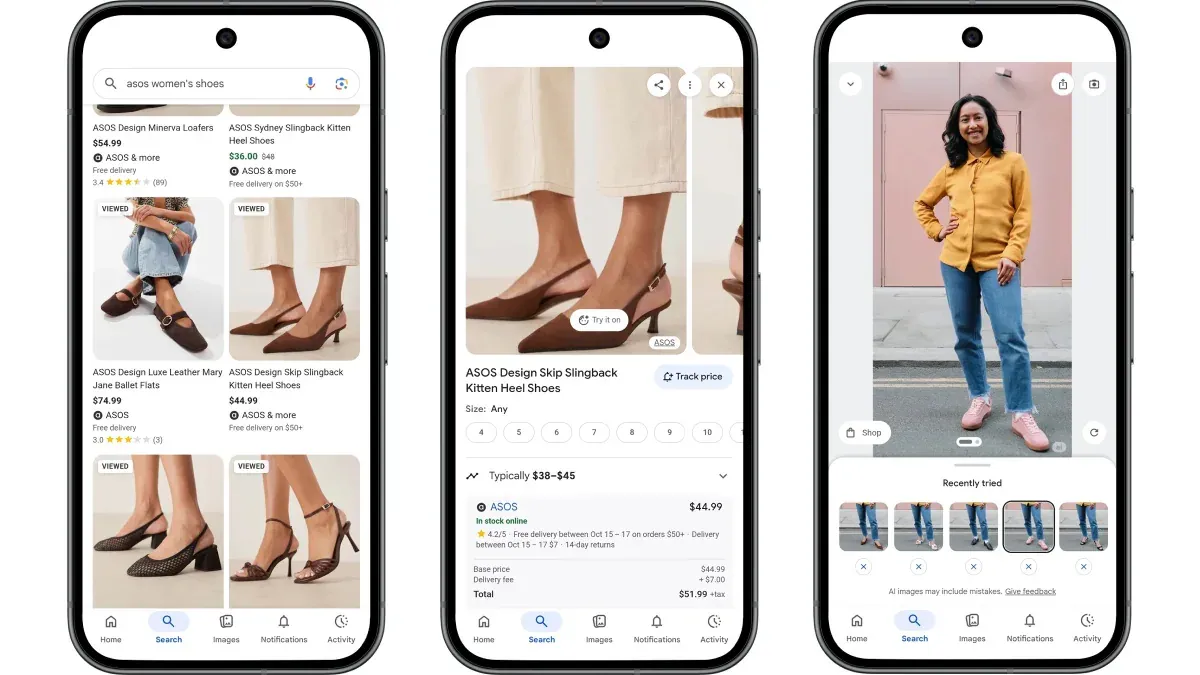

This week, it introduced an AI shoe try-on feature inside Google Shopping. Users upload a full-body photo, and the system renders how a chosen pair of sneakers, heels, or boots would look on them. It requires no AR app, no scanning, and no “foot pics.” The model studies the contour of your leg, the weight of your stance, and how light should fall across your toes.

It is a small update with enormous implications: Google is not selling shoes; it is replacing the fitting room itself.

From AR Trick to Generative Realism

What Google built is not augmented reality. It is generative realism, a quiet technical leap that replaces projection with reconstruction.

When you “try on” a pair of shoes, the model does not simply paste pixels onto your feet. It rebuilds your lower body using a diffusion network trained on light, depth, and fabric behavior. It reconstructs limbs, preserves shadows, and accounts for how your weight settles into the shoe, so the result looks photographed rather than simulated.
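To make the contrast concrete, here is roughly what “pasting pixels” amounts to: plain alpha compositing, where a shoe image is blended over the photo through a mask. This is a minimal illustrative sketch, not Google’s code. It assumes toy grayscale images stored as nested lists of floats in [0, 1], and the function name `paste_overlay` is hypothetical. The point it shows is structural: every pixel outside the mask is copied through untouched, so no new shadow or change in stance can ever appear. A generative model instead regenerates the region, which is why it can add shadows and contact with the floor.

```python
from typing import List

# Hypothetical toy representation: a grayscale image as rows of floats in [0.0, 1.0].
Image = List[List[float]]


def paste_overlay(photo: Image, shoe: Image, alpha: Image) -> Image:
    """Naive 'paste pixels' try-on via per-pixel alpha compositing.

    out = alpha * shoe + (1 - alpha) * photo

    Wherever alpha is 0 the original photo pixel is copied unchanged,
    so this method can never invent a new shadow or reposition a leg.
    """
    if not (len(photo) == len(shoe) == len(alpha)):
        raise ValueError("images must have the same height")
    out: Image = []
    for p_row, s_row, a_row in zip(photo, shoe, alpha):
        if not (len(p_row) == len(s_row) == len(a_row)):
            raise ValueError("images must have the same width")
        # Blend each pixel: shoe where alpha is 1, photo where alpha is 0.
        out.append([a * s + (1.0 - a) * p for p, s, a in zip(p_row, s_row, a_row)])
    return out


if __name__ == "__main__":
    photo = [[0.8, 0.8], [0.8, 0.8]]  # evenly lit floor
    shoe = [[0.1, 0.1], [0.1, 0.1]]   # dark shoe texture
    alpha = [[1.0, 0.0], [0.5, 0.0]]  # shoe covers only the left column
    print(paste_overlay(photo, shoe, alpha))
```

In the usage example, the right-hand column comes back exactly as the original floor, with no darkening next to the shoe. That absence is the “simulated” look the generative approach is meant to avoid.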