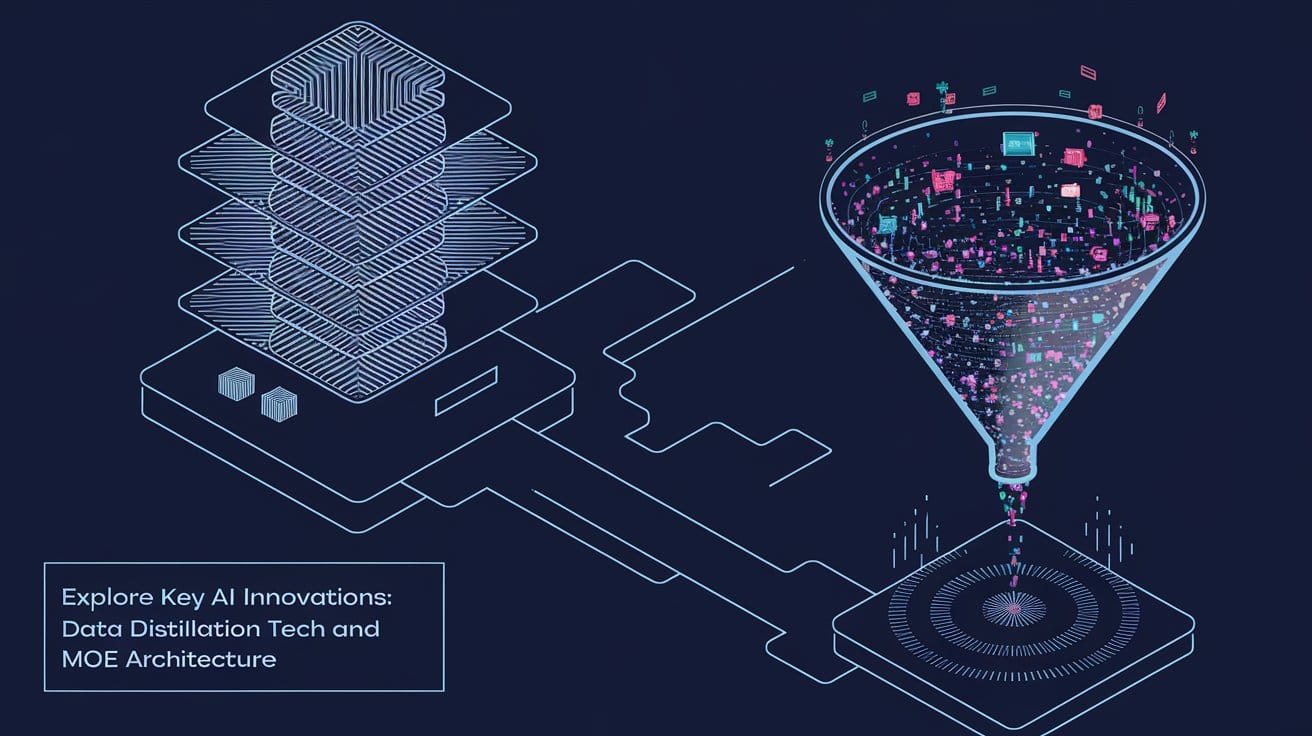

Explore DeepSeek’s Key Innovations: Data Distillation Tech and MoE Architecture

DeepSeek V3 is a major step forward in artificial intelligence (AI). Of its 671 billion total parameters, only 37 billion (about 5.5%) are activated per token, which shows how a Mixture-of-Experts (MoE) architecture can scale model capacity while keeping per-token compute low. Let's explore two critical aspects of DeepSeek V3: its data distillation technology and its MoE architecture. Together, these innovations help the model achieve state-of-the-art (SOTA) performance in coding, mathematics, and reasoning tasks while remaining cost-efficient and scalable.
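To make the "only a fraction of parameters is active per token" idea concrete, here is a minimal toy sketch of sparse top-k expert routing. It is not DeepSeek V3's actual router: the softmax gating, the four scalar "experts", and the function names (`active_fraction`, `top_k_route`, `moe_forward`) are all assumptions chosen for illustration. Only the two parameter counts come from the text above.

```python
import math

# Parameter counts quoted in the article.
TOTAL_PARAMS = 671e9
ACTIVE_PARAMS = 37e9


def active_fraction(total, active):
    """Share of parameters used for a single token."""
    return active / total


def top_k_route(scores, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores.

    Hypothetical gating rule for illustration; real routers differ in detail.
    """
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(scores[i] - peak) for i in chosen]
    total = sum(exps)
    return chosen, [e / total for e in exps]


def moe_forward(x, experts, router_scores, k):
    """Run only the chosen experts and mix their outputs by gate weight."""
    chosen, weights = top_k_route(router_scores, k)
    output = sum(w * experts[i](x) for i, w in zip(chosen, weights))
    return output, chosen


# Toy experts: each one just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, -1.0, 1.5]  # made-up router logits for one token

y, used = moe_forward(1.0, experts, router_scores, k=2)
print(f"active share of parameters: {active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS):.1%}")
print(f"experts evaluated: {used} of {len(experts)}")
```

In this sketch only two of the four experts are ever evaluated for the token, so compute scales with `k` rather than with the total number of experts. That is the same principle that lets a model hold far more parameters than it uses on any single token.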