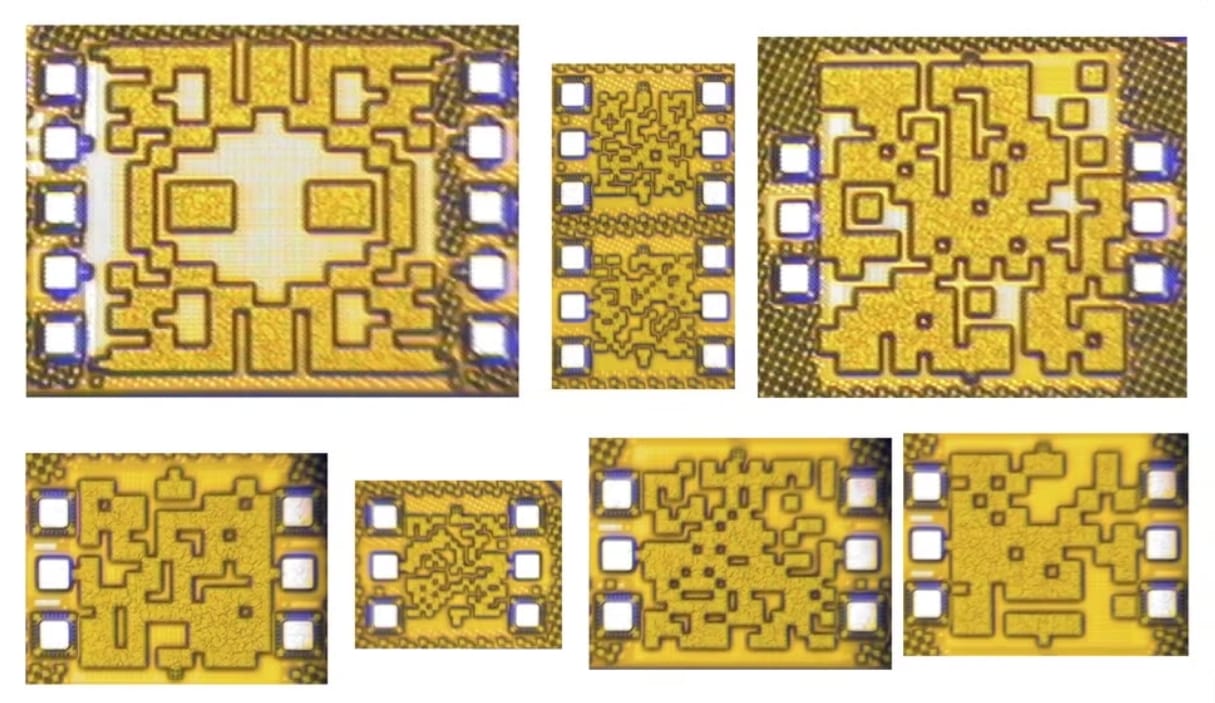

How Claude 3.5 Haiku Achieves 60% Faster Processing on AWS

Anthropic's Claude 3.5 Haiku model now runs on AWS Trainium2, and Amazon Bedrock has since added model distillation. Together these mark a significant advance for AI on AWS. This article covers what the integrations deliver: the performance improvements, the use cases, and the technical implications for businesses and developers.

Claude 3.5 Haiku on AWS Trainium2

Performance Enhancements

Claude 3.5 Haiku is one of the fastest models in the Claude family, with strong capabilities in coding, tool use, and reasoning. The integration with AWS Trainium2 introduces a "latency-optimized mode," available through Amazon Bedrock, in which the model runs up to 60% faster than in standard mode. The speedup comes from serving the model on Trainium2 chips.
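As a rough illustration of how a developer might request the latency-optimized mode, the sketch below builds a request for the Bedrock Runtime Converse API using boto3's `performanceConfig` parameter. The model identifier, the region, and the `maxTokens` value are assumptions for illustration, not details from the announcement. Check the Bedrock console for the model ID and regions where the optimized mode is actually offered in your account.

```python
from typing import Any

# Assumed model identifier (a cross-region inference profile ID); verify the
# ID available in your own account and region before using it.
MODEL_ID = "us.anthropic.claude-3-5-haiku-20241022-v1:0"


def build_converse_request(prompt: str, optimized: bool = True) -> dict[str, Any]:
    """Build keyword arguments for a Bedrock Runtime Converse call."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        # Illustrative output cap; tune for your workload.
        "inferenceConfig": {"maxTokens": 256},
        # Ask for the latency-optimized mode; "standard" is the default mode.
        "performanceConfig": {"latency": "optimized" if optimized else "standard"},
    }


def ask(prompt: str, region: str = "us-east-2") -> str:
    """Send the prompt to Bedrock and return the first text block of the reply.

    The default region is an assumption; the optimized mode may not be
    offered everywhere.
    """
    import boto3  # imported lazily so the request builder works without the AWS SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt))
    return response["output"]["message"]["content"][0]["text"]
```

Calling `ask("Summarize this ticket in one sentence.")` requires AWS credentials and model access. Keeping the request builder separate from the network call makes it easy to compare the two modes by toggling `optimized` and timing each response.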