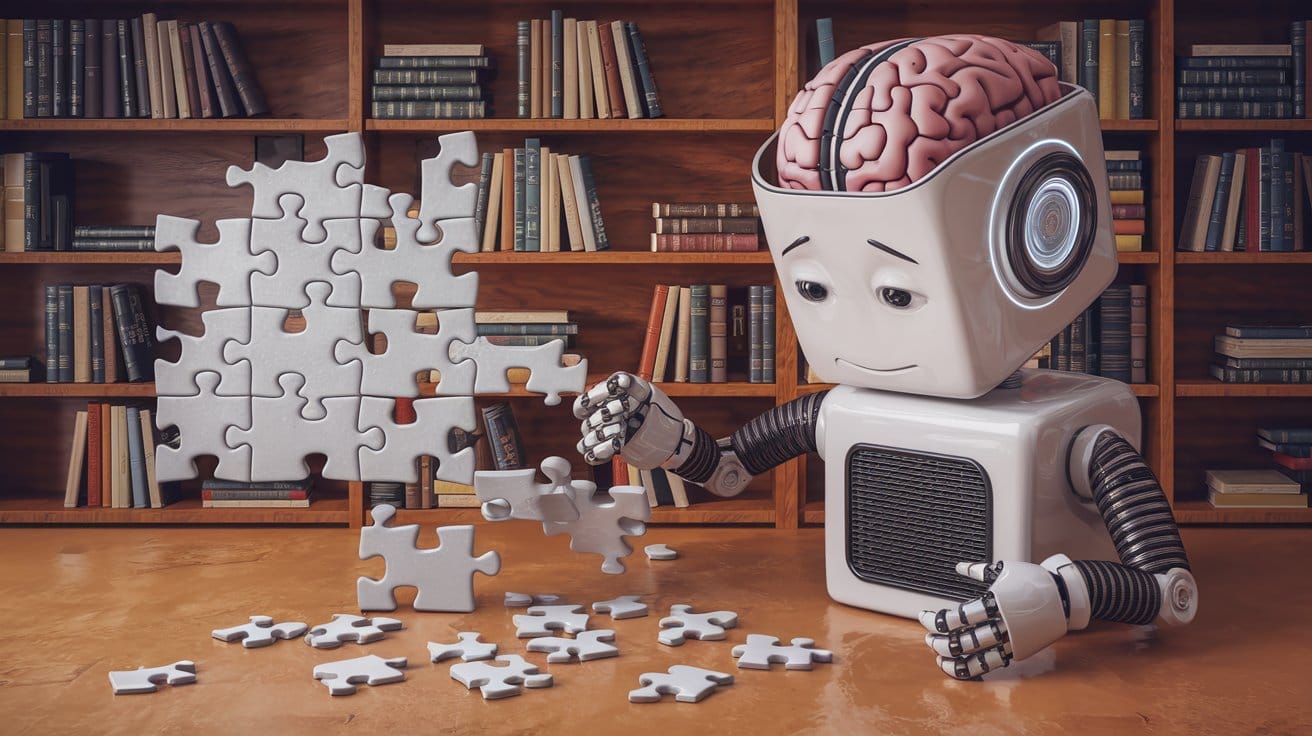

Why Large Language Models Struggle to Piece It All Together: The Compositional Conundrum

Large Language Models (LLMs) like GPT-3, GPT-4, and their AI cousins have been hailed as the prodigies of the artificial intelligence world. They can write poetry, generate code, answer trivia, and even explain quantum physics (or at least sound like they can). But, alas, even prodigies have an Achilles' heel. Recent research has revealed that these linguistic wizards stumble on compositional reasoning tasks: problems that require assembling a solution from smaller sub-solutions, much like fitting together the pieces of a jigsaw puzzle. Think of it as asking a chef to bake a cake, but instead of following a recipe, they must invent one by combining ingredients they've never used together before. Spoiler alert: the cake often doesn't bake.

In what follows, we'll dive into the nitty-gritty of why LLMs face fundamental limitations in compositional reasoning, what researchers are doing to address these challenges, and whether we should start panicking about the future of AI (short answer: not yet). Buckle up, because this is going to be a wild ride through benchmarks, trap problems, and the occasional Einstein riddle.