Why Small Language Models Are the Future of AI

In artificial intelligence (AI), attention has traditionally gone to building ever larger and more complex models. A significant shift is underway, however, with growing interest in small language models (SLMs). These models trade sheer size for efficiency, and they are emerging as an important direction in AI.

Understanding Small Language Models

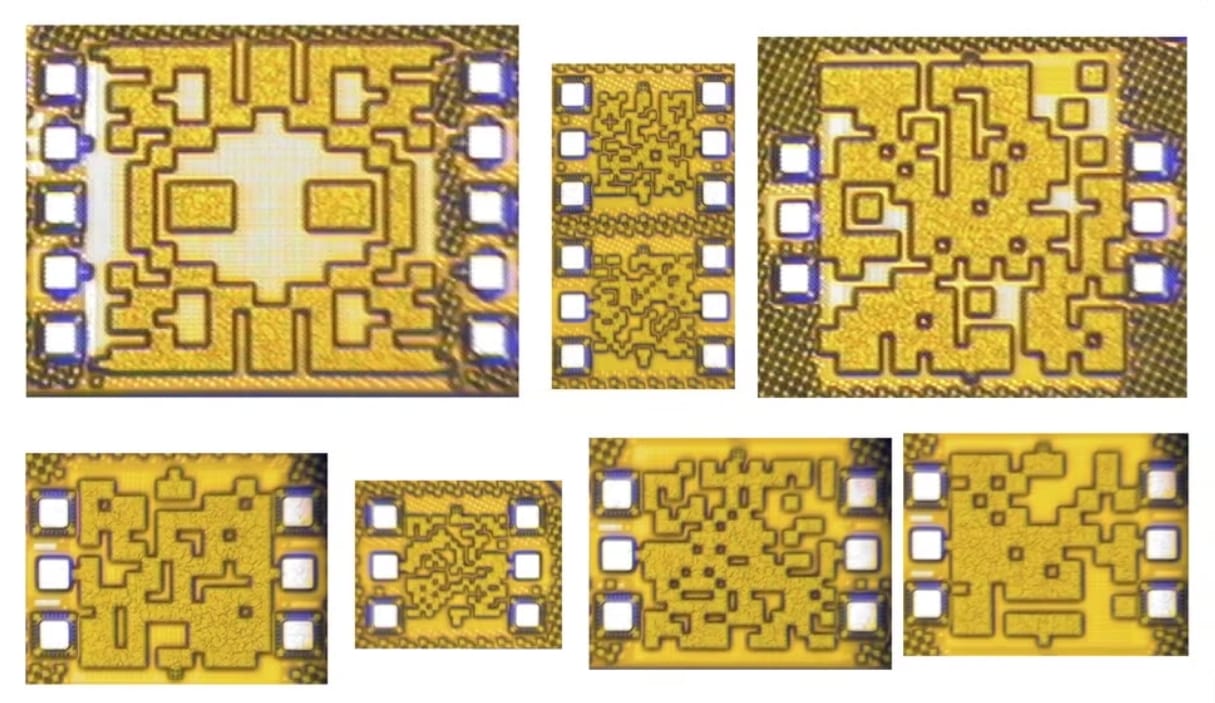

Small language models are essentially scaled-down versions of their larger counterparts. They are designed to be compact and resource-friendly, with fewer parameters that still allow them to perform effectively across a range of tasks. A common working definition puts them below roughly 30 billion parameters, which makes them significantly smaller than large language models (LLMs) such as GPT-3, with its 175 billion parameters, or GPT-4, whose size has not been officially disclosed.
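To make "resource-friendly" concrete, here is a rough back-of-the-envelope sketch of how parameter count translates into memory for the weights alone. The function name `weight_memory_gb`, the example model sizes, and the bytes-per-parameter figures are illustrative assumptions, not measurements of any particular model. Real deployments also need memory for activations, caches, and runtime overhead.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed to hold the weights, in GB (1 GB = 1e9 bytes).

    Hypothetical helper for illustration: it ignores activations,
    caches, and any framework overhead.
    """
    return num_params * bytes_per_param / 1e9


# Illustrative sizes only; 30e9 is the rough SLM upper bound mentioned above.
model_sizes = [("7B model", 7e9), ("30B model", 30e9), ("175B model", 175e9)]

# Assumed storage cost per parameter at common numeric precisions.
precisions = [("16-bit", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]

for name, params in model_sizes:
    for label, nbytes in precisions:
        gb = weight_memory_gb(params, nbytes)
        print(f"{name:>10} @ {label}: ~{gb:.1f} GB")
```

Under these assumptions, a 30-billion-parameter model stored at 16 bits per parameter needs about 60 GB for its weights, while a 7-billion-parameter model at 4 bits needs about 3.5 GB, which is the kind of gap that decides whether a model fits on a single consumer device.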